Artificial Intelligence (AI) is rapidly integrating into business operations, and the AI security landscape is evolving just as quickly to address emerging threats. In this new era, AI security isn’t merely about technical defenses — it’s the foundational element for:

- Achieving AI compliance.

- Navigating the regulatory landscape.

As a result, traditional cybersecurity services must now expand to address AI-targeted vulnerabilities and governance requirements.

This article provides a strategic framework to build trustworthy AI systems by addressing the dual challenge of:

1. Staying ahead of evolving cyber threats.

2. Meeting stringent data privacy and regulatory requirements.

To begin, let’s explore the specific threats that define this evolving attack surface.

AI Systems Introduce a Unique Attack Surface

When you assess your security posture — in contrast to traditional cybersecurity, which focuses on data and networks — the AI attack surface exposes distinct vulnerabilities that take advantage of how AI systems learn and function.

- Data Poisoning: One of the most significant threats — attackers secretly inject malicious inputs within training data. This compromises AI models before they are even deployed, which can lead to flawed business decisions or discriminatory outcomes.

- Prompt Injection Attacks: Exploit how AI models process input — allowing attackers to manipulate systems using carefully crafted inputs that bypass safety measures. When an AI assistant encounters hidden instructions, for example, its behavior can be redirected to perform unauthorized actions or reveal sensitive information.

- Model Theft: Cybercriminals steal proprietary AI models that an organization has invested years and significant resources into developing.

- Autonomous AI Agents: Introduce new security challenges due to their capacity for independent decision-making. The APIs used to connect these agents can become vulnerabilities if not properly secured — creating new entry points for attackers. As these AI agents become more interconnected, this AI attack surface only continues to grow — creating an ongoing ecosystem of vulnerability.

These technical vulnerabilities are not isolated incidents — they represent a fundamental shift in the AI security landscape. Understanding these threats is critical, as each security flaw represents a potential compliance failure — a direct link we will explore next.

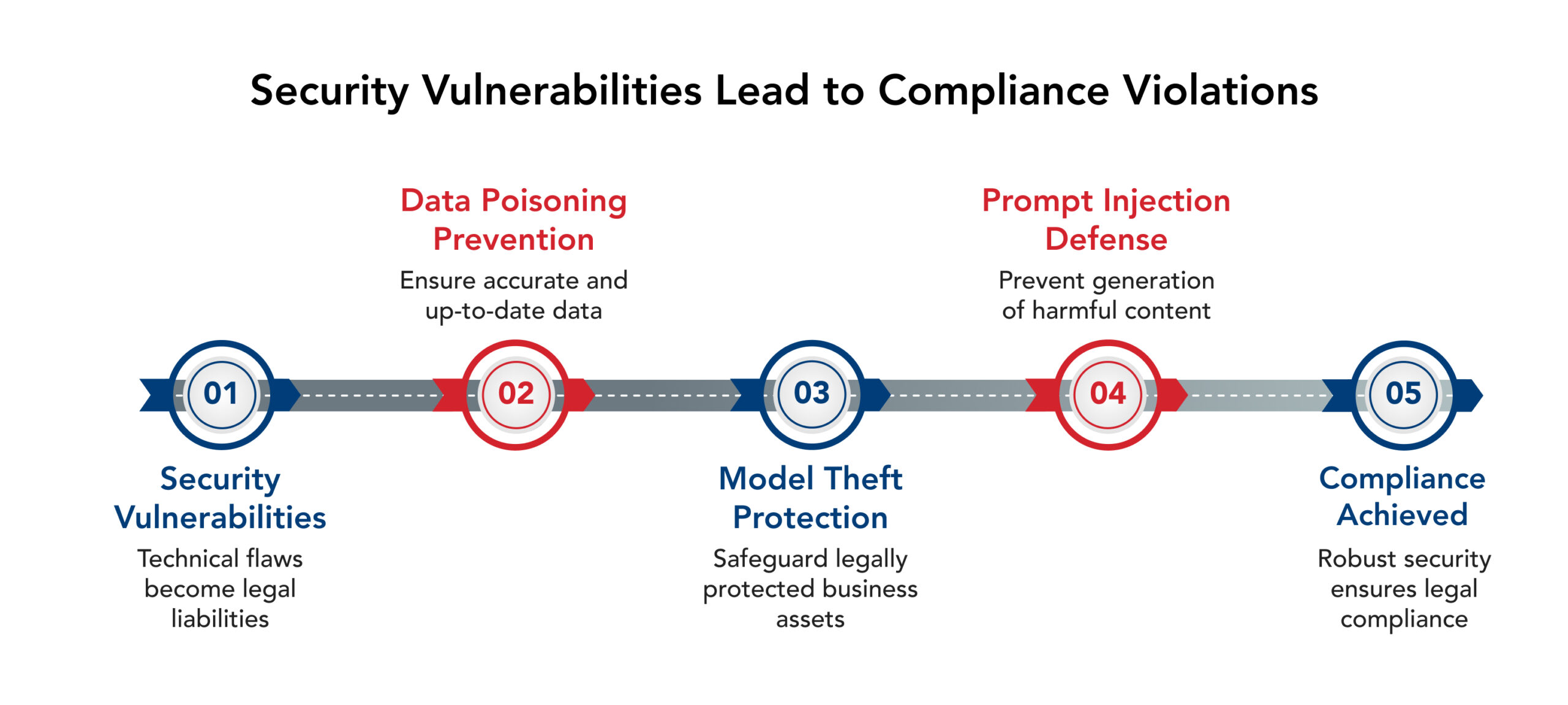

How Security Vulnerabilities Become Compliance Violations

That direct link from technical flaw to legal liability becomes clear when you decode the AI security landscape through a compliance lens.

- Data Poisoning isn’t just a technical issue.➔ When malicious actors contaminate training data, the resulting biased or inaccurate AI outputs can violate expectations outlined in the NIST AI Risk Management Framework (AI RMF) — which emphasizes data integrity, reliability, and responsible AI behavior. If the compromised data involves personal information, it may also conflict with obligations under applicable U.S. state privacy laws.

- Model Theft presents dual threats that extend far beyond a simple security breach.➔ When competitors extract proprietary AI models through model inversion attacks, the organization risks losing intellectual property and exposing sensitive information used in the training process — potentially incurring liability under U.S. trade secret law and facing enforcement actions under the FTC Act, which penalizes inadequate security practices.

- Prompt Injection Attacks also evolve from technical exploits into liability landmines for your organization.➔ If attackers manipulate your AI system to generate harmful, deceptive, or privacy-violating content, your organization may face consequences under FTC AI safety guidelines. In regulated sectors such as healthcare, HIPAA requirements also apply when protected health information is involved.

Ultimately, every security vulnerability becomes a legal vulnerability — demonstrating that robust security is the non-negotiable prerequisite for compliance.

This direct “Threat-Regulation Mapping” highlights the urgent need for a new strategic approach, one that builds security and compliance into AI systems from the very beginning — let’s explore this next.

Also Read: The Importance of Cybersecurity Strategies for Small Businesses

Adopt a Proactive Stance With a Secure by Design Philosophy

To adequately manage AI risks, you must shift from reactive, bolt-on security measures to a proactive approach that embeds security across the full AI development lifecycle — the essence of a “Secure by Design” philosophy, which integrates security from the initial design phase through deployment and monitoring.

The Secure by Design approach is supported by Defense in Depth (DiD) — a strategy that implements multi-layered security controls — including access controls and continuous monitoring. By employing this layered security approach, DiD ensures resilience by containing threats even if individual controls fail — preventing single points of failure.

To put these strategies into practice, let’s examine the Cybersecurity & Infrastructure Security Agency (CISA) Secure by Design framework, providing three core principles for implementation:

1. Ensuring Accountability for Customer Security Results: Establishes security as a shared, executive-led responsibility — ensuring that customer security is a business priority.

2. Embracing Radical Transparency and Accountability: Requires detailed documentation of model provenance and lineage — including ML Bill of Materials (ML-BOMs) — to build trust and ensure compliance.

3. Leading From the Top: Ensures that executive buy-in translates into concrete actions and resource allocation — making security a fundamental objective.

So, what operationalizes these principles? Enter Machine Learning Security Operations (MLSecOps) — the practical framework that applies DevSecOps principles within machine learning workflows.

MLSecOps:

- Addresses AI-targeted vulnerabilities from data ingestion to model deployment and monitoring.

- Maintains transparency through detailed documentation.

By fostering accountability and providing tangible evidence, MLSecOps helps secure executive support and cultivates a true Secure by Design culture. However, implementing these technical and strategic frameworks requires more than tools — it demands a fundamental shift in organizational collaboration, which we will explore in the next section.

Building an Integrated Framework for AI Risk Management

Security should never be an afterthought or left entirely to security teams — it should remain a core commitment integrated into AI development, and this is exactly why collaboration is crucial in the AI security landscape.

Before implementing specific tools, understand that an “Integrated Risk Management” program breaks down silos — uniting Security Teams, Legal Counsel, and Compliance Officers in a shared effort.

To achieve this, you can move beyond reactive compliance checklists with a multi-pronged approach that includes the following:

- Threat-Regulation Mapping involves creating a crosswalk between AI security threats and regulatory obligations — thereby transforming security documentation into compliance evidence.

- When conducting security assessments, shift from asking “Is this secure?” to “What compliance risks does this gap create?” — this reframing ties technical issues directly to legal mandates.

- Develop Joint Security-Compliance Playbooks that Security Teams can implement and Legal Counsel can audit — bridging the technical-legal communication gap.

By developing a shared vocabulary, you can translate technical concepts; for example, reframe “adversarial examples” as “regulatory exposure vectors’ to make threats relevant to legal teams. Implement a compliance impact scorecard to quantify regulatory risk for vulnerabilities — helping prioritize fixes and demonstrate compliance efforts.

There are significant benefits to creating executive briefing templates that present security investments as compliance risk mitigation — securing leadership support for proactive risk management.

Ultimately, implementing these collaborative practices transforms security and compliance from siloed functions into a unified engine for proactive risk management — setting the stage for building lasting stakeholder trust.

Unifying Security and Compliance is Key to Trustworthy AI

By treating the AI security landscape as your compliance foundation, you transform regulatory burdens into business enablers that build stakeholder trust and drive innovation. A proactive “Secure by Design” philosophy and continuous risk management are essential for navigating the evolving AI environment and ensuring sustainable initiatives.

This is where expert guidance becomes critical. At CMIT Solutions of Chevy Chase and Silver Spring, we provide expert IT services that help businesses adopt AI confidently through a secure AI framework. Our team designs and implements intelligent AI tools within controlled, secure environments that prioritize:

- Data protection and privacy safeguards

- Defense against modern AI-specific cyber threats

- Adherence to evolving AI, data, and privacy regulations

- Specialized compliance services to support audits, documentation, and governance

While our primary office is in Silver Spring, CMIT Solutions of Silver Spring is dedicated to delivering expert IT support, proactive cybersecurity, and reliable technology solutions to businesses throughout the region. This includes our valued clients in Rockville, Derwood, Chevy Chase, Olney, Burtonsville, and Highland. We’re committed to being your trusted IT partner for local businesses.

Connect with us today to schedule your comprehensive IT assessment and discover reliable, secure, and compliant technology solutions tailored to your business goals!